Artificial intelligence is shifting from passive language models to agents—systems capable of taking meaningful actions in the real world. These agents need a reliable way to interact with business applications, structured data, internal tools and APIs. Until recently, every organization had to build custom adapters and integrations for each system, creating a fragmented, costly and often insecure architecture.

The Model Context Protocol (MCP) was created to solve exactly this problem. It is emerging as an open, universal standard that allows AI agents to discover tools, understand capabilities, and perform actions through a consistent and secure interface. For enterprises preparing to operationalize AI, MCP represents the next foundational layer of modern software architecture.

Why MCP Matters Now

Before MCP, integrating an AI agent with an external system required bespoke engineering. Each API had its own naming conventions, its own parameters, its own authentication flow, and its own unpredictable response patterns. A single agent connecting to ten different systems required ten separate adapters. Scale this to dozens of tools across an enterprise, and integration becomes a bottleneck that slows innovation and increases risk.

MCP addresses this by offering a shared language between AI systems and the software they need to interact with. It reduces integration overhead, improves consistency, and allows organizations to build agentic capabilities on top of a predictable foundation rather than a patchwork of custom connections.

The timing is critical: as AI adoption accelerates and agents become more capable, the need for unified, secure, and extensible integration has become unavoidable.

What MCP Is: The Essence of the Standard

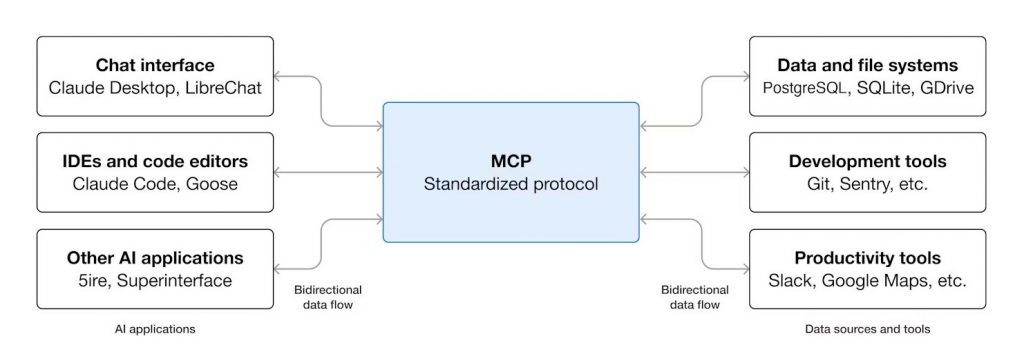

The Model Context Protocol is best understood as a bridge—a universal way for AI systems to connect with real-world applications. It defines how agents can discover what actions are available, what data structures they can access, and how they should interact with different environments. Unlike traditional APIs, which were designed for applications, MCP is designed specifically for AI systems and the fluid, context-driven behaviors they require.

MCP servers expose tools, resources and prompts as structured, machine-readable descriptions. When an AI client connects, it asks the server what it offers and learns how to call each capability and what constraints apply. Instead of relying on custom scripts or manual API interpretation, the agent works from a standardized description of the environment.
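To make the idea of a structured capability description concrete, here is a minimal sketch in plain Python of what a client might receive for a single tool. The field names `name`, `description` and `inputSchema` follow the MCP tool definition; the `create_ticket` tool and the `missing_arguments` helper are hypothetical and exist only for illustration.

```python
import json

# Hypothetical tool description, shaped like an entry a server returns
# when a client lists its tools. The input schema is ordinary JSON Schema.
CREATE_TICKET_TOOL = {
    "name": "create_ticket",
    "description": "Open a support ticket in the helpdesk system.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "priority": {"type": "string", "enum": ["low", "normal", "high"]},
        },
        "required": ["title"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


if __name__ == "__main__":
    print(json.dumps(CREATE_TICKET_TOOL, indent=2))
    print(missing_arguments(CREATE_TICKET_TOOL, {"priority": "high"}))
```

Because the description is data rather than documentation, a client can check a planned call against it before anything reaches the backend system.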

This shift—from bespoke integrations to standardized capability discovery—marks a significant advancement in AI engineering.

How MCP Works: Architecture & Flow

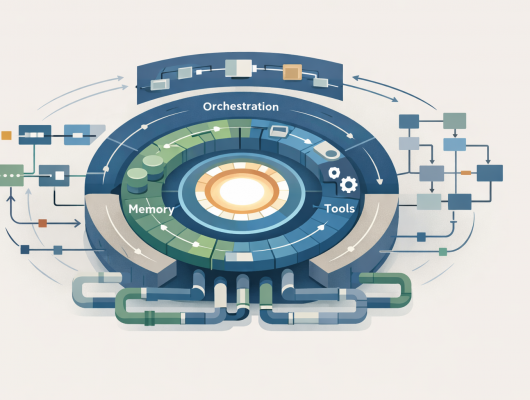

MCP follows a clean and extensible architecture built around three main components: the host, the client, and the server. The host is the application the user interacts with—for example, Claude, ChatGPT, VS Code or a corporate AI interface. Inside the host lives the MCP client, which connects to one or more MCP servers. These servers represent the systems an agent can interact with: databases, file systems, business applications, or internal services.

Communication between client and server uses JSON-RPC 2.0 messages, carried over STDIO for local integrations or HTTP for remote ones. MCP servers describe their capabilities in structured JSON, exposing:

- tools, which represent actions the agent can perform;

- resources, which represent data the agent can retrieve;

- prompts, which help guide complex workflows.
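As a rough illustration of the message flow, the sketch below answers two JSON-RPC 2.0 requests using only the Python standard library. The method names `tools/list` and `tools/call` and the result shapes follow the MCP specification; the `add` tool, the `TOOLS` table and the `handle` function are hypothetical. The sketch leaves out the `initialize` handshake and the transport layer that a real server needs.

```python
import json

# Hypothetical in-memory tool; the name and behavior are illustrative only.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle(raw_request: str) -> str:
    """Answer one JSON-RPC 2.0 request for tools/list or tools/call."""
    request = json.loads(raw_request)
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {
            "tools": [
                {
                    "name": name,
                    "description": tool["description"],
                    "inputSchema": tool["inputSchema"],
                }
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        value = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # -32601 is the JSON-RPC 2.0 error code for "Method not found".
        error = {"code": -32601, "message": f"Method not found: {method}"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


if __name__ == "__main__":
    print(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
    call = {
        "jsonrpc": "2.0",
        "id": 2,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
    }
    print(handle(json.dumps(call)))
```

Resources and prompts follow the same request and response pattern, with their own list and read or get methods.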

When the client connects, it discovers everything the server offers, with no guessing, reverse engineering, or handcrafted logic. This is what makes MCP especially powerful for systems that rely on contextual reasoning and dynamic decision-making.

Model Context Protocol diagram explaining MCP architecture.

Credit: modelcontextprotocol.io

MCP vs Traditional APIs: A Shift in Integration Philosophy

Traditional APIs were never designed for agentic AI. They require strict endpoints, rigid schemas, and deterministic inputs—constraints that AI systems frequently violate. More importantly, APIs vary dramatically from one service to another. Even two airline booking APIs may use completely different fields to represent identical concepts.

MCP provides a uniform structure that abstracts these differences away. Instead of forcing developers to build ten different adapters for ten systems, MCP allows tools to be described in a consistent format that any agent can understand. This lowers integration cost, accelerates deployment, and creates a stable environment where agents can execute tasks without ambiguity.
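The airline example can be sketched in a few lines of Python. Everything here is hypothetical: the two `airline_*_search` functions stand in for real vendor APIs, and their field names are invented to show the mismatch. The point is only that the agent sees one tool, `search_flights`, with one input shape and one output shape.

```python
# Hypothetical responses from two airline APIs that name the same
# concepts differently. Neither payload comes from a real service.
def airline_a_search(origin: str, destination: str) -> list[dict]:
    return [{"flt_no": "AA100", "dep_airport": origin,
             "arr_airport": destination, "fare_usd": 320.0}]


def airline_b_search(origin: str, destination: str) -> list[dict]:
    return [{"flightNumber": "BB7", "from": origin, "to": destination,
             "price": {"amount": 295.0, "currency": "USD"}}]


def search_flights(origin: str, destination: str) -> list[dict]:
    """Single tool surface: merge both sources into one shape, cheapest first."""
    flights = []
    for f in airline_a_search(origin, destination):
        flights.append({"flight": f["flt_no"], "origin": f["dep_airport"],
                        "destination": f["arr_airport"], "price_usd": f["fare_usd"]})
    for f in airline_b_search(origin, destination):
        flights.append({"flight": f["flightNumber"], "origin": f["from"],
                        "destination": f["to"], "price_usd": f["price"]["amount"]})
    return sorted(flights, key=lambda f: f["price_usd"])


if __name__ == "__main__":
    for flight in search_flights("LIS", "JFK"):
        print(flight)
```

The mapping code does not disappear. It moves into the server, where it is written once, instead of being repeated inside every agent that needs flight data.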

Rather than replacing APIs, MCP wraps them, offering a standardized surface that sits between agents and the existing system landscape.

What Model Context Protocol Enables Inside an Enterprise

How the Model Context Protocol Changes Enterprise AI Integration

The practical impact of MCP becomes clear once organizations begin enabling their internal AI agents to interact with real systems. Agents can generate reports using live ERP data, update customer records, extract insights from document repositories, query SQL databases, review compliance policies, open support tickets, analyze logs, or draft operational summaries.

This bridges the gap between purely generative AI and operational AI. Where traditional AI answers questions, MCP-enabled agents complete tasks. They do not merely inform decisions; they execute workflows.

For enterprise teams, this means copilots can be built not only for content creation, but for HR, finance, operations, security, customer support, compliance, and engineering. Model Context Protocol becomes the foundation enabling AI to act rather than simply respond.

Security & Governance: The Real Considerations

Although Model Context Protocol brings structure and predictability, it does not magically solve security. It is deliberately lightweight: authentication, authorization, and validation must be implemented by developers and risk teams.

This is not a weakness—it is intentional. MCP provides the protocol; organizations provide the policy.

Key governance concerns include:

- ensuring tools follow least-privilege design,

- validating all agent inputs before execution,

- isolating sensitive capabilities,

- enforcing strict role-based access controls,

- auditing agent actions,

- securing all transport channels.
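Several of these concerns can be enforced at a single choke point in front of every tool. The following is a minimal sketch under stated assumptions, not a complete security model: the `ALLOWED_ROLES` table, the `guarded_call` function, the tool names and the `customer_id` rule are all hypothetical. It shows deny-by-default role checks, input validation before execution, and an audit record for every attempt.

```python
import json
import logging
from datetime import datetime, timezone

audit_log = logging.getLogger("mcp.audit")

# Hypothetical policy table: which roles may call which tools.
ALLOWED_ROLES = {
    "read_customer": {"support", "admin"},
    "delete_customer": {"admin"},
}


class PolicyError(Exception):
    """Raised when a call is rejected before it reaches the backend."""


def guarded_call(role: str, tool: str, arguments: dict, handler):
    """Check role and input, write an audit record, then run the tool."""
    # Unknown tools map to an empty role set, so they are denied by default.
    allowed = role in ALLOWED_ROLES.get(tool, set())
    customer_id = arguments.get("customer_id")
    valid = type(customer_id) is int and customer_id > 0
    # Every attempt is recorded, including the ones that get rejected.
    audit_log.info(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "tool": tool,
        "arguments": arguments,
        "allowed": allowed,
        "valid": valid,
    }, default=str))
    if not allowed:
        raise PolicyError(f"role {role!r} may not call {tool!r}")
    if not valid:
        raise PolicyError("customer_id must be a positive integer")
    return handler(arguments)
```

In this sketch the audit logger has no handler attached, so a deployment would still need to route `mcp.audit` to durable storage. Transport security and isolation of sensitive tools sit outside what a wrapper like this can cover.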

Microsoft’s recent announcement that Windows 11 will include native MCP security controls is a significant milestone. It signals that MCP is evolving from a developer-oriented concept to a core building block of the operating system, enabling safer agentic automation at scale.

Industry Adoption: A Growing Standard

Anthropic created MCP and integrated it deeply into the Claude ecosystem. Microsoft is now adopting it across Windows 11, Copilot, and internal agentic frameworks. As MCP servers appear for more platforms, including GitHub, Slack, Google Drive and Notion, an ecosystem is emerging where AI can interact with software systems through consistent, predictable interfaces.

This adoption matters: standards become standards when industry leaders support them. MCP is rapidly transitioning from a promising concept to a widely adopted integration layer.

Implementing MCP: A Practical Guide for Teams

Deploying Model Context Protocol begins with identifying meaningful workflows: where agents need to fetch information, perform actions or orchestrate tasks. From there, teams can use Python or TypeScript SDKs to build MCP servers, define capabilities and apply their organization’s security policies.

The implementation process follows a clear sequence:

- Select the workflows or systems where MCP will add immediate value.

- Build an MCP server and define tools, resources and prompts.

- Apply governance: access rules, auditing, validation, and secure transport.

- Connect the server to MCP-enabled clients like Claude Desktop or VS Code.

- Test—iteratively—how agents interpret and execute capabilities.

- Deploy in phases to prevent unsafe tool usage or unbounded agent actions.

This creates a sustainable pattern for future integrations and reduces the long-term complexity typically associated with AI automation.
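The final step of the sequence above, deploying in phases, could be expressed as a small gate in front of tool execution. This is one possible sketch, not a prescribed mechanism: the `PHASES` plan, the tool names and the `Session` class are hypothetical. Each phase adds tools to the earlier ones, and a per-session cap keeps an agent from making an unbounded number of calls.

```python
from dataclasses import dataclass, field

# Hypothetical rollout plan: each phase adds tools to the ones before it.
PHASES = {
    1: {"search_documents", "read_ticket"},  # read-only tools
    2: {"create_ticket"},                    # low-risk writes
    3: {"update_customer_record"},           # sensitive writes
}


def enabled_tools(phase: int) -> set[str]:
    """Tools available at a phase: everything from phase 1 up to `phase`."""
    return set().union(*(tools for p, tools in PHASES.items() if p <= phase))


@dataclass
class Session:
    """Tracks one agent session and caps how many tool calls it may make."""
    phase: int
    max_calls: int = 20
    calls: list = field(default_factory=list)

    def authorize(self, tool: str) -> bool:
        """Allow the call only if the tool is enabled and the cap is not reached."""
        if tool not in enabled_tools(self.phase) or len(self.calls) >= self.max_calls:
            return False
        self.calls.append(tool)
        return True


if __name__ == "__main__":
    session = Session(phase=1, max_calls=2)
    print(session.authorize("read_ticket"))
    print(session.authorize("create_ticket"))
```

Moving from one phase to the next then becomes a reviewed configuration change rather than a code change.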

Limitations & Open Questions

MCP is still early. It is not yet a universal standard like HTTP, and it does not enforce a full security model. An agent knows only what a server describes: if a tool's description or schema is incomplete, the agent cannot infer the missing backend context. Whether MCP becomes mandatory for future AI ecosystems is unclear, but momentum suggests it will become a de facto layer for agentic interaction.

These questions will evolve as Model Context Protocol matures—and as operating systems and cloud providers adopt it more deeply.

Why MCP Represents a Strategic Advantage

For leaders building AI capabilities, MCP is more than a protocol. It is an architectural decision. It reduces integration costs, unifies tool access, supports stronger governance, accelerates delivery, and enables AI to operate with real-world data and applications. As enterprises move from experimentation to scaled automation, MCP becomes a foundation for sustainable, secure, and reliable agent systems.

How ConAIs Supports MCP Adoption

ConAIs helps organizations design, develop and govern MCP infrastructure. We support end-to-end deployment—from MCP server creation to tool mapping, from security controls to operational integration. Our goal is to ensure MCP is not only implemented, but implemented safely, strategically, and in alignment with a company’s long-term AI roadmap.

Conclusion

The Model Context Protocol is becoming the connective tissue of the agent era. It provides the structure that AI has been missing—a universal layer through which models can understand capabilities, take actions, and operate inside complex enterprise environments. With MCP gaining adoption from Anthropic to Microsoft, it is rapidly transforming into a foundational standard for the next decade of AI.

Organizations that embrace MCP now will be better positioned to build powerful, secure and scalable agentic systems that unlock real operational value.

You can also explore our recent article on data sovereignty to understand how governance intersects with agentic AI.
