Generative AI has dramatically lowered the cost of creating content. Text, images, audio, and video can now be produced in seconds, at near-zero marginal cost, and at unprecedented scale. This shift has unlocked genuine productivity gains, but it has also introduced a new and increasingly visible phenomenon: AI slop.

AI slop refers to low- to mid-quality AI-generated content that floods digital channels without sufficient regard for accuracy, usefulness, or intent. It is not always false. It is not always malicious. But it is overwhelmingly noisy. And as generative systems scale faster than governance, AI slop is becoming a structural problem for platforms, organizations, and decision-makers alike.

This is not a fringe internet issue. AI slop is now a leadership concern.

What Is AI Slop?

AI slop is best understood as the byproduct of scale without stewardship. It includes AI-generated text, images, video, and audio that are created quickly, cheaply, and in large volumes, often optimized for engagement rather than value. Much of it looks plausible at first glance. Some of it is entertaining. Some of it is misleading. Most of it is simply disposable.

What makes AI slop distinct from earlier forms of low-quality content is not just volume, but velocity. Generative systems can produce more content in a day than human creators could generate in months. When incentives favor speed and attention, slop becomes the default output.

Importantly, AI slop is not defined by whether content is “good” or “bad” in an aesthetic sense. It is defined by misalignment: content created without a clear purpose, accountability, or feedback loop, but still amplified by algorithms and workflows.

Why AI Slop Is Spreading Faster Than Quality

The rise of AI slop is not a failure of models. It is a failure of systems.

Several forces converge here. First, the economics of content creation have flipped. When production is cheap and distribution is automated, the cost of publishing low-value material approaches zero. Second, attention-based platforms reward frequency and novelty more reliably than depth or rigor. Third, many organizations deploy generative AI tactically, without rethinking incentives, review mechanisms, or success metrics.

As a result, generative AI often scales output faster than it scales judgment. Systems optimize for “can we generate this?” rather than “should we generate this?” or “who is accountable for its impact?”

This is why AI slop is not limited to social media feeds. It appears in marketing copy, internal documentation, SEO-driven articles, synthetic reviews, training data, and even knowledge bases that were once carefully curated.

AI Slop Is Not a Model Problem

A common reaction to AI slop is to assume that better models will solve it. Higher accuracy. Better reasoning. More guardrails. These improvements matter, but they miss the core issue.

AI slop emerges upstream of model quality. It is produced when organizations deploy generative tools without clear intent, governance, or evaluation. A highly capable model placed inside a poorly designed workflow will still generate slop, only faster and more convincingly.

This is why simply upgrading models does not reverse the trend. Without structural changes, better models often accelerate the problem by making low-quality output more scalable and harder to distinguish from meaningful content.

The uncomfortable truth is this: AI slop is a systems design failure, not a technological limitation.

The Hidden Cost of AI Slop for Organizations

For leaders, the real danger of AI slop is not embarrassment. It is erosion.

Unchecked AI slop slowly degrades trust. Internally, teams become less confident in AI-assisted outputs, increasing review burden and decision fatigue. Externally, brands risk becoming interchangeable, indistinguishable, or unreliable as automated content crowds out clarity and originality.

There is also a compounding effect on knowledge systems. When low-quality AI-generated material enters documentation, wikis, or training corpora, it contaminates future outputs. Feedback loops weaken. Signal-to-noise ratios collapse. Over time, organizations lose the ability to tell whether their systems are informing decisions or merely filling space.

Perhaps most critically, AI slop blurs accountability. When “the system generated it” becomes an acceptable explanation, ownership dissolves. That is a leadership problem, not a technical one.

Why Platforms Are Already Shifting Toward Authenticity Signals

Major platforms are beginning to acknowledge that the slop problem cannot be solved purely through detection. As generative content becomes more realistic, distinguishing real from synthetic grows harder, not easier.

This has prompted a subtle but important shift. Rather than trying only to identify fake content, platforms are exploring ways to signal authenticity. Originality, provenance, creator credibility, and contextual trust are becoming more valuable than polished perfection.

In a world where anything can be generated, imperfection becomes a signal. So does transparency. So does intent.

This shift matters for organizations. It suggests that the future is not about producing more AI content, but about producing fewer, more intentional, and more accountable AI-assisted outputs.

From Slop to Signal: Designing AI Systems That Matter

Avoiding AI slop does not mean avoiding generative AI. It means designing systems where generation is constrained by purpose.

Organizations that move beyond slop tend to share several characteristics. They define where AI is allowed to generate autonomously and where human oversight is mandatory. They tie outputs to business outcomes rather than engagement metrics alone. They treat feedback as a first-class input, not an afterthought. And they design AI systems as part of broader workflows, not as isolated content machines.

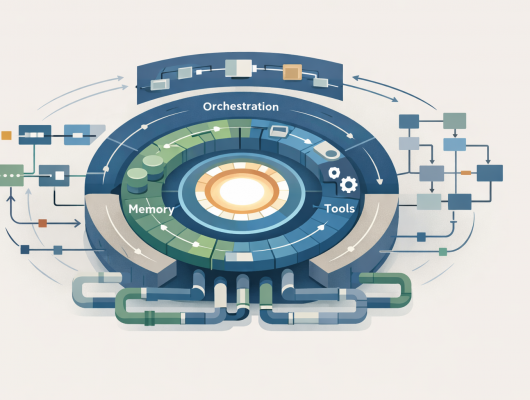
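
One way to make the first two characteristics concrete is to write the boundary between autonomous generation and mandatory oversight as an explicit rule, rather than leaving it to habit. The sketch below is illustrative only: the content types, the `Draft` fields, and the `route_draft` function are hypothetical names invented for this example, and a real policy would be set by each organization.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTO_PUBLISH = "auto_publish"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


@dataclass(frozen=True)
class Draft:
    content_type: str        # hypothetical label, e.g. "customer_email"
    owner: Optional[str]     # named person accountable for the outcome
    purpose: Optional[str]   # business outcome the draft is tied to


# Assumed policy: only these low-risk types may ship without review.
AUTONOMOUS_TYPES = {"internal_summary", "alt_text"}


def route_draft(draft: Draft) -> Route:
    """Decide what happens to an AI-generated draft before it is published."""
    # Content with no owner or no stated purpose is blocked outright.
    if not draft.owner or not draft.purpose:
        return Route.BLOCK
    # Low-risk content types are allowed to publish autonomously.
    if draft.content_type in AUTONOMOUS_TYPES:
        return Route.AUTO_PUBLISH
    # Everything else requires a human reviewer.
    return Route.HUMAN_REVIEW


if __name__ == "__main__":
    email = Draft("customer_email", owner="j.doe", purpose="renewal reminder")
    print(route_draft(email).value)
```

The point of the sketch is the ordering: the questions "who owns this?" and "why are we generating this?" are checked before any question about content type.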

This is also where agentic thinking becomes relevant. As AI systems begin to act, not just generate, the cost of noise rises sharply. Autonomous systems amplify whatever intent they are given. Without clarity, they will scale confusion.

In practice, moving from AI slop to signal requires more than content guidelines. It requires architectural clarity. As AI systems evolve from generators to actors, organizations need standardized ways to define what models can access, how they interpret context, and which actions they are allowed to take.

This is where emerging standards like the Model Context Protocol (MCP) become relevant. MCP is an open protocol that standardizes how AI applications discover and connect to tools and data sources. A protocol does not guarantee quality on its own, but replacing ad-hoc integrations with explicitly declared capabilities gives organizations a defined surface to review, restrict, and audit. In other words, it helps create the conditions where AI outputs are grounded in context rather than noise.
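
To illustrate what "defining which actions a model is allowed to take" can look like, here is a minimal sketch in plain Python. It does not use MCP or any MCP SDK, and it is not how the protocol is implemented. `ToolRegistry`, `lookup_policy`, and the sample data are hypothetical names invented for this example. It only shows the underlying idea: capabilities are declared up front, can be listed, and anything undeclared is refused.

```python
from typing import Callable, Dict


class ToolRegistry:
    """Hypothetical allowlist of actions an AI system may take."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., object]] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name: str, description: str,
                 func: Callable[..., object]) -> None:
        # Every capability is declared with a human-readable description.
        self._tools[name] = func
        self._descriptions[name] = description

    def list_tools(self) -> Dict[str, str]:
        # The declared surface that reviewers and auditors can inspect.
        return dict(self._descriptions)

    def call(self, name: str, **kwargs: object) -> object:
        # Undeclared actions are refused instead of improvised.
        if name not in self._tools:
            raise PermissionError(f"tool not allowed: {name}")
        return self._tools[name](**kwargs)


# Assumed sample data standing in for a curated knowledge base.
POLICIES = {"refunds": "Refunds are issued within 14 days."}


def lookup_policy(topic: str) -> str:
    return POLICIES.get(topic, "No policy found.")


registry = ToolRegistry()
registry.register("lookup_policy", "Read a published policy by topic.",
                  lookup_policy)

if __name__ == "__main__":
    print(registry.list_tools())
    print(registry.call("lookup_policy", topic="refunds"))
```

The design choice worth noting is that the default is refusal: a request for an action that was never registered raises an error, so scope expands only through a deliberate, reviewable change.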

What Leaders Should Do Next

AI slop is not an inevitable side effect of progress. It is a signal that generative capabilities have outpaced organizational readiness.

Leaders do not need to ban AI-generated content. They need to reassert authorship, intent, and accountability within AI-enabled systems. That means asking harder questions: Why are we generating this? Who owns its outcome? How do we know it adds value?

The organizations that win in the next phase of AI adoption will not be those that generate the most content, but those that generate the most meaning. They will treat generative AI as an amplifier of strategy, not a substitute for it.

At ConAIs, we see AI slop as a warning sign. It tells us where systems lack governance, where incentives are misaligned, and where scale has outpaced judgment. Addressing it is not about aesthetics. It is about leadership.

If your AI systems are producing more noise than signal, the problem is not the model. It is the design.
