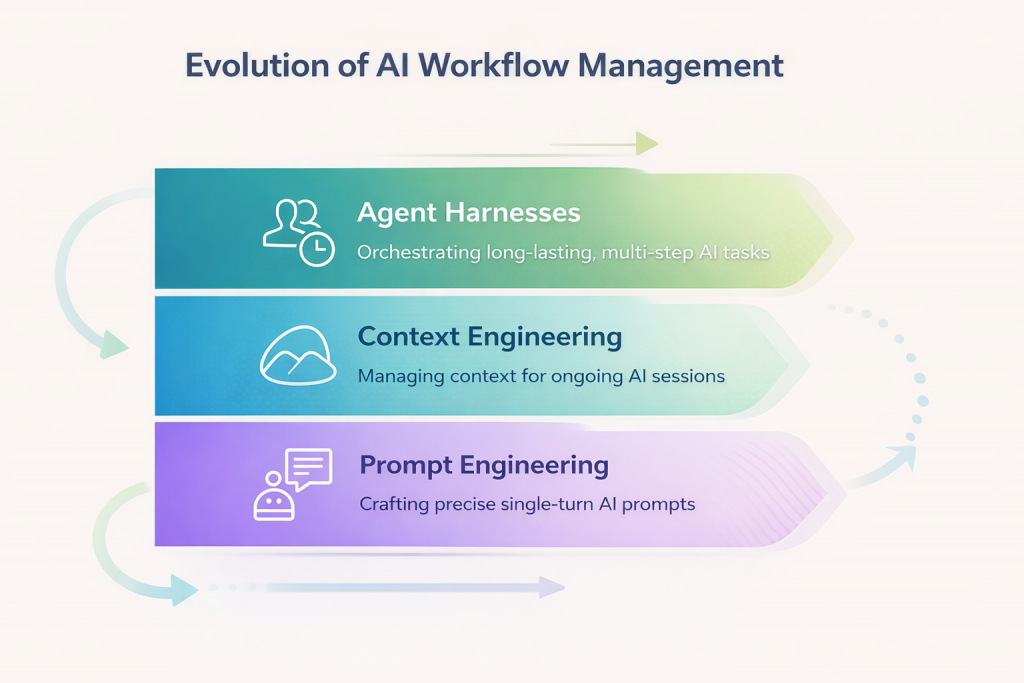

For a long time, progress in artificial intelligence was framed almost entirely through models: which one was larger, which one scored higher on benchmarks, which one reasoned more fluently. This framing made sense when AI systems were largely confined to single-turn interactions and short-lived tasks.

That context no longer exists.

Today’s AI agents are expected to operate across tools, data sources, and time horizons that extend well beyond a single prompt. They are asked to plan, execute, verify, and persist. In these conditions, intelligence alone is not the limiting factor. Reliability is.

What ultimately determines whether an agent succeeds is not how smart the model is in isolation, but how well the surrounding system supports it during long-running, real-world work. This is where the concept of the agent harness becomes critical.

Why Models Alone Are No Longer Enough

As AI systems move from assistance toward execution, a fundamental gap becomes visible. Even highly capable models struggle to maintain coherence across dozens of steps, multiple tool calls, or sessions that span hours or days. Instructions degrade, context fragments, and progress stalls.

Benchmarks rarely capture this failure mode. They reward short-term reasoning performance, not durability. A model that performs exceptionally well in a controlled evaluation can still fail catastrophically when deployed into a complex workflow.

In practice, the first thing that breaks in production is not reasoning ability. It is continuity.

What Is an Agent Harness?

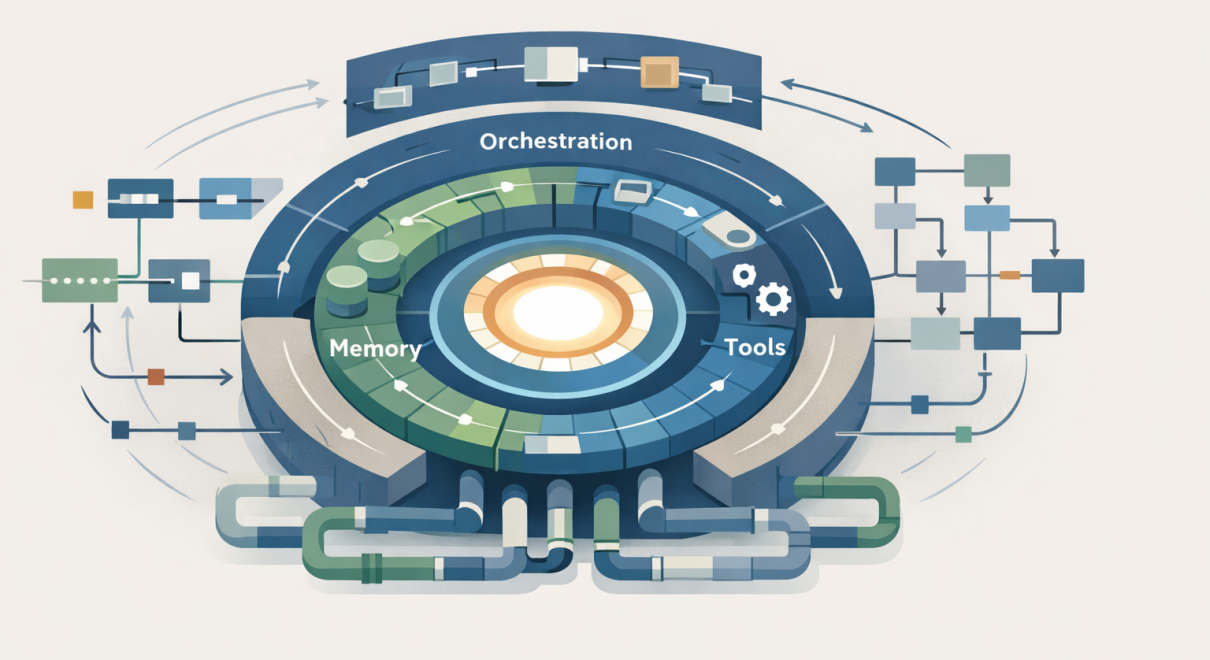

An agent harness is the software infrastructure that governs how an AI agent operates over time. It is not the model itself, and it is not the agent’s logic. Instead, it is the system that wraps around the model and manages everything the model cannot do on its own.

This includes maintaining state across sessions, enabling and supervising tool usage, curating context under token constraints, enforcing guardrails, and verifying outputs before they propagate downstream.

A useful mental model is to think of the agent harness as an operating system. The model provides raw cognitive capacity, but the harness is what turns that capacity into reliable execution.

Why Agent Harnesses Emerged

Agent harnesses did not emerge because models stopped improving. They emerged because expectations changed.

Once AI systems began interacting with external environments such as filesystems, APIs, databases, and user interfaces, the limitations of prompt-only designs became unavoidable. Models needed memory beyond a single context window. They needed a way to act in the world. They needed structure so that a single bad step did not sink the whole task.

Without an external system to manage these concerns, even advanced agents repeatedly failed to make sustained progress. Harnesses emerged as the missing layer that binds reasoning, action, and memory into a coherent loop.

How an Agent Harness Works in Practice

At runtime, the harness sits between the user, the model, and the external environment. When a task begins, the harness captures intent and initializes the working state. As the model reasons, the harness interprets structured outputs as tool requests and executes them safely. Results are then injected back into the model’s context so reasoning can continue.
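The loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `fake_model`, the `TOOLS` registry, and the JSON shape of the model's output (`type`, `name`, `args`, `content`) are all hypothetical stand-ins chosen for the example.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> callable taking keyword arguments.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
}

def fake_model(context: list[dict]) -> str:
    """Stand-in for a real model call; returns a JSON string.

    It requests the `add` tool once, then answers using the tool result.
    """
    last = context[-1]
    if last["role"] == "tool":
        return json.dumps({"type": "final", "content": f"The sum is {last['content']}"})
    return json.dumps({"type": "tool_call", "name": "add", "args": {"a": 2, "b": 3}})

def run_task(task: str, model: Callable[[list[dict]], str], max_steps: int = 5) -> str:
    # Capture intent and initialize the working state.
    context = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        output = json.loads(model(context))
        if output["type"] == "final":
            return output["content"]
        # Interpret the structured output as a tool request.
        tool = TOOLS.get(output["name"])
        if tool is None:
            result = f"error: unknown tool {output['name']!r}"
        else:
            try:
                result = tool(**output["args"])
            except Exception as exc:
                # Report tool failures to the model instead of crashing the run.
                result = f"error: {exc}"
        # Inject the result back into the model's context so reasoning can continue.
        context.append({"role": "tool", "content": result})
    raise RuntimeError("step budget exhausted before a final answer")

answer = run_task("What is 2 + 3?", fake_model)
```

The step budget is the design choice worth noting: a harness that bounds the loop can fail loudly rather than let a confused model spin indefinitely.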

Throughout this process, the harness decides what information remains in working memory, what gets summarized, and what is retrieved when needed. It also performs validation, prompting corrections when outputs fail to meet defined criteria.
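One way to picture the working-memory decision is a budgeted trim of the message history. The sketch below assumes a crude word-count token estimate and a placeholder where a real harness would call a summarizer; `Message`, `estimate_tokens`, and `curate_context` are illustrative names, not part of any particular library.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str
    content: str

def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())

def curate_context(history: list[Message], budget: int) -> list[Message]:
    """Keep the first message (the task) plus the most recent messages that fit
    the budget, and replace everything dropped with one summary placeholder.

    The placeholder's own cost is not counted against the budget in this sketch.
    """
    if not history:
        return []
    task, rest = history[0], history[1:]
    remaining = budget - estimate_tokens(task.content)
    kept: list[Message] = []
    # Walk backwards so the newest messages are kept first.
    for msg in reversed(rest):
        cost = estimate_tokens(msg.content)
        if cost > remaining:
            break
        kept.append(msg)
        remaining -= cost
    kept.reverse()
    dropped = len(rest) - len(kept)
    if dropped:
        # A real harness would summarize the dropped messages here.
        summary = Message("system", f"[summary of {dropped} earlier messages]")
        return [task, summary, *kept]
    return [task, *kept]
```

Pinning the original task at the front is a deliberate choice here: it is the message most likely to be lost in a long session and the one the model can least afford to forget.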

When a session ends, the harness ensures that progress does not disappear with the context window. Artifacts, logs, and state are persisted so work can resume later without starting from scratch.
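A minimal sketch of that persistence, assuming state is a small JSON document on local disk. The file layout (`completed_steps`, `artifacts`) and the `max_steps` cutoff used to simulate a session ending are assumptions made for illustration.

```python
import json
import os
import tempfile
from pathlib import Path

def save_state(path: Path, state: dict) -> None:
    """Write state atomically: write a temp file, then rename it over the target."""
    fd, tmp = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(state, f, indent=2)
    os.replace(tmp, path)

def load_state(path: Path) -> dict:
    # Resume from the last checkpoint if one exists, otherwise start fresh.
    if path.exists():
        return json.loads(path.read_text(encoding="utf-8"))
    return {"completed_steps": [], "artifacts": {}}

def run_session(path: Path, plan: list[str], max_steps: int) -> dict:
    """Run up to `max_steps` unfinished steps of the plan, checkpointing after each."""
    state = load_state(path)
    done = 0
    for step in plan:
        if step in state["completed_steps"]:
            continue  # finished in an earlier session
        if done == max_steps:
            break  # simulate the session ending mid-task
        state["completed_steps"].append(step)
        save_state(path, state)  # checkpoint after every step
        done += 1
    return state
```

Calling `run_session` twice with the same path and plan picks up where the first call stopped, which is the property the paragraph above describes.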

Core Responsibilities of an Agent Harness

Despite differences in implementation, most agent harnesses converge on the same set of responsibilities. They manage memory and state, integrate tools in a controlled way, engineer context dynamically, guide planning and task decomposition, and enforce verification and safety constraints.
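Two of these responsibilities, safety constraints and verification, are easy to show in miniature. The sketch below assumes a simple tool allowlist and a validator that returns `None` on success or a description of the problem; `ALLOWED_TOOLS`, `check_tool_call`, and `generate_verified` are hypothetical names for this example.

```python
from typing import Callable, Optional

# Hypothetical allowlist; a real harness would load this from policy or config.
ALLOWED_TOOLS = {"read_file", "run_tests"}

def check_tool_call(name: str) -> None:
    """Guardrail: refuse any tool that is not explicitly allowed."""
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool {name!r} is not permitted")

def generate_verified(
    generate: Callable[[str], str],
    validate: Callable[[str], Optional[str]],
    prompt: str,
    max_attempts: int = 3,
) -> str:
    """Call `generate` until `validate` accepts the output.

    `validate` returns None when the output is acceptable, or a short
    description of the problem, which is fed back into the next attempt.
    """
    feedback = ""
    for _ in range(max_attempts):
        output = generate(prompt + feedback)
        problem = validate(output)
        if problem is None:
            return output
        feedback = f"\nPrevious attempt was rejected: {problem}"
    raise ValueError(f"no valid output after {max_attempts} attempts")
```

Both checks live outside the model, so they hold regardless of what the model was prompted to do.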

These are not auxiliary features. They are the mechanisms that turn an AI model into an operational system.

Harnesses, Orchestrators, and Frameworks

It is useful to distinguish an agent harness from related concepts that are often conflated.

Frameworks provide abstractions and building blocks for constructing agents. Orchestrators define control flow and decision logic. The harness, by contrast, is the runtime environment that makes those abstractions usable at scale.

Frameworks help you assemble components. Orchestrators decide what happens next. Harnesses ensure that whatever happens next can happen safely, repeatedly, and over time.

Why Agent Harnesses Matter Now

As AI agents enter production environments, reliability becomes the defining constraint. Organizations need agents that can persist, recover from errors, and operate under governance constraints. These requirements cannot be met at the prompt level.

They are architectural problems, and the harness is where those problems are addressed.

In practice, two systems using the same underlying model can perform radically differently depending on the quality of their harness. This is why harness engineering is becoming as important as model selection.

The Agent Harness and the Bitter Lesson

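There is also a strategic lesson emerging, one that echoes Rich Sutton’s “bitter lesson”: general methods that scale with computation tend to outlast hand-engineered structure. As models improve rapidly, rigid control logic becomes fragile. What required complex pipelines a year ago may collapse into a single prompt tomorrow.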

Effective harnesses therefore prioritize adaptability over cleverness. They are designed to be lightweight, modular, and easy to revise. Over-engineering the harness itself risks locking assumptions in place that future models will invalidate.

In the agentic era, systems must be built to change.

Harnesses as Feedback Engines

Beyond execution, harnesses generate structured data about how agents behave over time. Failures, retries, late-stage drift, and recovery paths all become measurable signals. This data is far more representative of real-world performance than static benchmarks.
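As a rough illustration of what those signals might look like once recorded, the sketch below aggregates a list of per-step events into a few rates. The `Event` schema and the three event kinds are assumptions for the example; a production harness would likely record far more detail.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

@dataclass
class Event:
    run_id: str
    step: int
    kind: str  # one of "ok", "retry", "failure" in this sketch

def summarize(events: list[Event]) -> dict:
    """Aggregate raw harness events into a few run-level signals."""
    counts = Counter(e.kind for e in events)
    total = len(events)
    failure_steps = [e.step for e in events if e.kind == "failure"]
    first_failure: Optional[int] = min(failure_steps) if failure_steps else None
    return {
        "total": total,
        "retry_rate": counts["retry"] / total if total else 0.0,
        "failure_rate": counts["failure"] / total if total else 0.0,
        # A late first failure hints at drift rather than an early misread of the task.
        "first_failure_step": first_failure,
    }
```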

As training and inference environments begin to converge, harnesses are likely to play a central role in feeding execution data back into model and system improvement.

In that sense, the harness becomes more than infrastructure. It becomes a learning surface.

From Agent Harness to Agentic Systems

As organizations adopt agentic AI, the critical questions shift. The focus moves away from which model is strongest and toward whether agents can be trusted to operate continuously, recover gracefully, and remain controllable.

Those questions are answered at the harness level.

And as emerging standards like the Model Context Protocol (MCP) define how agents discover tools, interpret context, and expose capabilities in a standardized way, the harness becomes the natural enforcement point for interoperability and governance.
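To make the enforcement-point idea concrete, here is a small sketch of a harness gating tool invocations before they reach a server. It assumes requests follow the JSON-RPC 2.0 shape MCP uses for tool invocation (method `tools/call`, with `name` and `arguments` under `params`); the `enforce_policy` function, the allowlist, and the choice of error code `-32000` (from the JSON-RPC implementation-defined range) are assumptions for illustration and should be checked against the current specification.

```python
from typing import Optional

def enforce_policy(request: dict, allowed_tools: set[str]) -> Optional[dict]:
    """Return None to forward the request, or a JSON-RPC error response to block it."""
    if request.get("method") != "tools/call":
        return None  # this sketch only gates tool invocations
    name = request.get("params", {}).get("name")
    if name in allowed_tools:
        return None
    return {
        "jsonrpc": "2.0",
        "id": request.get("id"),
        "error": {
            "code": -32000,  # assumed: implementation-defined error code
            "message": f"tool {name!r} blocked by harness policy",
        },
    }

# Hypothetical request for a tool named "delete_records".
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "delete_records", "arguments": {"table": "users"}},
}
response = enforce_policy(request, allowed_tools={"search_docs"})
```

Because the check operates on the standardized message rather than on any one tool's internals, the same policy can cover every server the agent connects to.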

Agent harnesses are no longer an implementation detail. They are the control layer of the agentic future.
