In the past two years, AI agents have gained an extraordinary number of capabilities. Search, code execution, CRM access, ticketing systems, payment APIs, internal knowledge bases. Each new tool promises leverage. Each integration looks harmless in isolation.

Yet across enterprises deploying agentic systems at scale, a pattern is emerging: tool richness is becoming one of the primary drivers of agent failure, cost overruns, and trust erosion.

The cost is not theoretical. It shows up in latency, reliability metrics, security incidents, and ultimately adoption.

Why Enterprises Keep Adding AI Agent Tools

Most organizations follow the same trajectory.

An agent launches with a narrow scope. Early success creates pressure to expand. Teams respond by wiring in more tools rather than rethinking system boundaries. This is understandable. Tools are tangible. Governance is not.

Vendor survey data shows the same pattern. In 2025 internal platform surveys conducted by multiple AI infrastructure vendors, enterprise agents averaged 12–18 tool integrations within six months of initial deployment, up from fewer than five at launch. Teams reported that more than half of these tools were added reactively, in response to edge cases or user complaints, rather than through planned capability design.

Tool growth feels like progress. Until it isn’t.

When Tool Count Becomes a Reliability Risk

The first measurable impact appears in reliability.

At one global SaaS company, an internal agent used for customer support escalation initially relied on four tools. After expanding to 14 tools, successful end-to-end task completion dropped from 92% to 67%, despite no changes to the underlying model. Postmortems showed that the failures were not model hallucinations. They were tool-selection errors, partial executions, and inconsistent API responses.

Each additional tool increased the agent’s decision surface. Instead of reasoning about customer intent, the agent spent cycles resolving infrastructure ambiguity. The model didn’t get worse. The system did.

The Latency Compound Effect

Latency is the most underestimated cost of tool-rich agents because it compounds invisibly.

Consider a “simple” agent flow:

search → CRM lookup → pricing API → policy check → response generation.

Individually, each call might take 150–300 ms. Chained sequentially, the four tool calls add roughly 0.6–1.2 seconds before the model even begins synthesis. In practice, retries, authentication refreshes, and rate limits regularly push real-world latency past two seconds.
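A back-of-the-envelope model of this chain, assuming the four tool calls run strictly one after another. The 150–300 ms range comes from the text; the one-retry-per-call scenario and the 200 ms authentication refresh are illustrative assumptions, not measurements.

```python
# Per-call latency range from the text, in milliseconds.
CALL_MS = (150, 300)
TOOLS = ["search", "crm_lookup", "pricing_api", "policy_check"]

def chain_latency_ms(per_call_ms, n_calls, retries_per_call=0, overhead_ms=0):
    """Total latency of n sequential calls; each retry repeats a call once."""
    attempts = n_calls * (1 + retries_per_call)
    return per_call_ms * attempts + overhead_ms

best = chain_latency_ms(CALL_MS[0], len(TOOLS))
worst = chain_latency_ms(CALL_MS[1], len(TOOLS))
# Assumed degraded case: one retry per call plus a 200 ms auth refresh.
degraded = chain_latency_ms(CALL_MS[1], len(TOOLS), retries_per_call=1, overhead_ms=200)

print(best, worst, degraded)  # 600 1200 2600
```

Under these assumptions, a single round of retries is enough to move a slow chain well past the two-second mark, and every added tool lengthens the chain further.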

One enterprise retail deployment measured a 3.4x increase in median response time after expanding from six to eleven tools. User satisfaction dropped sharply, not because answers were wrong, but because agents felt hesitant and inconsistent.

Humans forgive mistakes faster than hesitation.

Tool Failures That Don’t Trigger Alerts

The most dangerous failures are silent ones.

A financial services agent was granted access to both a legacy reporting API and a newer analytics service. Both returned valid data. Both were “correct.” But their definitions of “active customer” differed subtly. The agent switched between them depending on context, producing inconsistent summaries that passed validation but confused decision-makers.

No exception was thrown. No alert fired. The system behaved “as designed.”

Tool-rich agents often fail by being plausibly wrong, not obviously broken.
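To make the failure mode concrete, here is a toy example in which two data sources apply different, individually valid definitions of "active customer". The 90-day and 30-day windows, the record layout, and the function names are invented for illustration; the source does not say how the two definitions actually differed.

```python
from datetime import date, timedelta

TODAY = date(2025, 1, 31)  # fixed date so the example is reproducible

# Hypothetical customer records: (customer_id, last_activity_date)
CUSTOMERS = [
    ("c1", TODAY - timedelta(days=5)),
    ("c2", TODAY - timedelta(days=45)),
    ("c3", TODAY - timedelta(days=80)),
    ("c4", TODAY - timedelta(days=200)),
]

def active_legacy(customers, today=TODAY):
    """Assumed legacy definition: any activity in the last 90 days."""
    return [cid for cid, last in customers if (today - last).days <= 90]

def active_analytics(customers, today=TODAY):
    """Assumed newer definition: any activity in the last 30 days."""
    return [cid for cid, last in customers if (today - last).days <= 30]

legacy = active_legacy(CUSTOMERS)
analytics = active_analytics(CUSTOMERS)

# Both calls succeed and both results are well-formed, so schema validation passes.
# Only a cross-source comparison reveals the disagreement.
disagreement = sorted(set(legacy) ^ set(analytics))
print(len(legacy), len(analytics), disagreement)  # 3 1 ['c2', 'c3']
```

Neither function is wrong on its own terms. The inconsistency only exists at the level of the agent that is free to call either one.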

Security and Governance Costs Multiply with Tools

Every tool is an authorization surface.

In a 2024 audit of agent-enabled internal systems at a Fortune 500 firm, security teams found that nearly 40% of agent tool permissions exceeded least-privilege requirements, largely because permissions were granted at the connector level rather than the capability level.

Auditing agent behavior required correlating logs across seven systems. Revoking access meant updating multiple policies, some owned by different teams. Governance did not fail because policies were missing. It failed because enforcement was fragmented.

Tool count turns governance into a distributed systems problem.
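One way to picture the gap between connector-level and capability-level permissions is as a set difference between what the connectors grant and what the agent's capabilities actually need. The scope strings and capability names below are hypothetical.

```python
# Hypothetical scopes granted when each connector is attached wholesale.
CONNECTOR_GRANTS = {
    "crm": {"crm:read", "crm:write", "crm:delete", "crm:export"},
    "ticketing": {"tickets:read", "tickets:write", "tickets:admin"},
}

# Hypothetical scopes each capability actually needs.
CAPABILITY_NEEDS = {
    "lookup_customer": {"crm:read"},
    "escalate_ticket": {"tickets:read", "tickets:write"},
}

def excess_scopes(grants, needs):
    """Return granted scopes that no capability requires."""
    granted = set().union(*grants.values())
    required = set().union(*needs.values())
    return granted - required

excess = excess_scopes(CONNECTOR_GRANTS, CAPABILITY_NEEDS)
total = sum(len(scopes) for scopes in CONNECTOR_GRANTS.values())
print(sorted(excess))
print(f"{len(excess)} of {total} granted scopes exceed least privilege")
```

A check like this is only possible when required scopes are declared per capability in one place, which is the kind of centralization the fragmented setup described above lacks.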

The Economic Cost No One Tracks

Beyond reliability and security, tool richness has a direct cost profile.

Each tool introduces:

- API usage fees

- infrastructure overhead

- monitoring and logging costs

- operational on-call burden

One platform team reported that agent-related infrastructure costs grew 2.7x year-over-year, while user-facing capability only increased marginally. The marginal cost of “just one more tool” was invisible in sprint planning, but very visible in quarterly cloud spend reviews.

Tool sprawl behaves like technical debt with a monthly invoice.

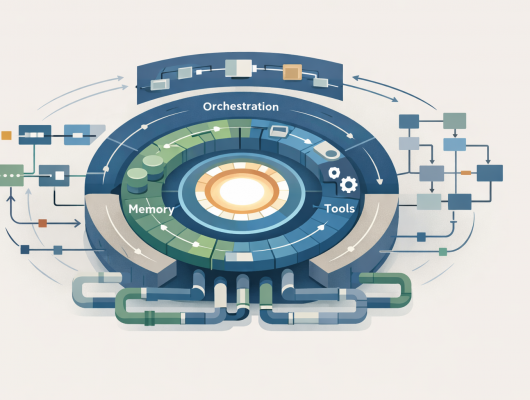

Why the Agent Harness Changes the Equation

This is why the agent harness matters more than the agent itself.

A harness is where tool access becomes intentional. Instead of exposing raw tools, the harness defines capabilities. Capabilities encode allowed actions, required context, validation rules, and failure handling.
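A minimal sketch of what such a capability might look like, assuming a simple in-process harness. The `Capability` class, its fields, and the `refund_order` example (including the 100-unit refund limit) are hypothetical and not drawn from any particular framework.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

@dataclass
class Capability:
    name: str
    required_context: set                        # keys the caller must supply
    validate: Callable[[dict], Optional[str]]    # error message, or None if valid
    run: Callable[[dict], Any]                   # the allowed action, wrapping the raw tool
    on_failure: Callable[[Exception], str]       # failure handling policy

    def invoke(self, ctx: dict) -> dict:
        missing = self.required_context - set(ctx)
        if missing:
            return {"ok": False, "error": f"missing context: {sorted(missing)}"}
        error = self.validate(ctx)
        if error:
            return {"ok": False, "error": error}
        try:
            return {"ok": True, "result": self.run(ctx)}
        except Exception as exc:
            return {"ok": False, "error": self.on_failure(exc)}

def _refund(ctx):
    # Stand-in for a real payment API call.
    return f"refunded {ctx['amount']} on {ctx['order_id']}"

refund_order = Capability(
    name="refund_order",
    required_context={"order_id", "amount"},
    validate=lambda ctx: None if 0 < ctx["amount"] <= 100 else "amount outside allowed range",
    run=_refund,
    on_failure=lambda exc: f"refund failed: {exc}",
)

print(refund_order.invoke({"order_id": "A1", "amount": 40}))
print(refund_order.invoke({"order_id": "A1", "amount": 500}))
print(refund_order.invoke({"amount": 40}))
```

The first call succeeds; the second and third are rejected before the underlying tool is ever called, which is where the reduction in wasted tool calls would come from in a design like this.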

Teams that introduced a harness layer consistently reported:

- fewer tool calls per task

- lower latency variance

- clearer audit trails

- faster root cause analysis

One engineering org reduced agent tool calls by 38% without removing functionality, simply by enforcing capability boundaries through the harness.

The model stayed the same. Outcomes improved.

From Tool-Rich Agents to Capability-Driven Systems

The most mature agent deployments are not tool-rich. They are capability-driven.

In these systems, agents don’t choose from dozens of tools. They invoke well-defined capabilities that internally manage tools, policies, and sequencing. This reduces cognitive load for the model and operational load for the organization.
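For illustration, a capability can hide a fixed tool sequence behind a single entry point, so the model picks from a short list of capabilities instead of every underlying tool. The three tool stubs, the pricing rule, and the `quote_for_customer` capability are all hypothetical.

```python
# Stub tools standing in for real integrations (all hypothetical).
def crm_lookup(customer_id):
    return {"customer_id": customer_id, "tier": "gold"}

def pricing_api(sku, tier):
    base_cents = {"widget": 10_000}[sku]
    # Assumed rule: gold-tier customers get 10% off.
    return base_cents * 90 // 100 if tier == "gold" else base_cents

def policy_check(price_cents):
    return price_cents <= 50_000

def quote_for_customer(customer_id, sku):
    """One capability; the harness, not the model, owns the call order."""
    trace = []
    customer = crm_lookup(customer_id)
    trace.append("crm_lookup")
    price_cents = pricing_api(sku, customer["tier"])
    trace.append("pricing_api")
    approved = policy_check(price_cents)
    trace.append("policy_check")
    if not approved:
        return {"ok": False, "reason": "price violates policy", "trace": trace}
    return {"ok": True, "price_cents": price_cents, "trace": trace}

# The model-facing surface lists capabilities only, not the tools behind them.
CAPABILITIES = {"quote_for_customer": quote_for_customer}

quote = CAPABILITIES["quote_for_customer"]("c42", "widget")
print(quote)
```

Here the model makes one choice where it would otherwise make three, and the returned trace records which tools ran and in what order.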

Capability-driven design aligns agents with business intent rather than infrastructure topology. It also makes systems explainable again.

From Agent Harness to Agentic Systems

As organizations adopt agentic AI, the core question shifts. It is no longer which model performs best on benchmarks, but whether agents can be trusted to operate continuously, recover from failure, and remain governable at scale.

That question is answered at the harness level.

And as emerging standards like the Model Context Protocol (MCP) define how agents discover and invoke tools, the harness becomes the enforcement point for interoperability, safety, and control. MCP standardizes discovery and invocation. The harness decides what is allowed to execute.
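A sketch of that split, using plain dictionaries in place of a real MCP client. The discovered tool list, the allowlist, and the `execute` and `fake_call_tool` functions are hypothetical; a real deployment would obtain the tool list from an MCP server and route calls through its client library.

```python
# Tools as a server might advertise them (illustrative data only).
DISCOVERED_TOOLS = [
    {"name": "search_kb", "description": "Search the knowledge base"},
    {"name": "issue_refund", "description": "Refund a payment"},
    {"name": "delete_account", "description": "Permanently delete an account"},
]

# Harness policy: being discoverable does not imply being permitted.
ALLOWED = {"search_kb", "issue_refund"}
AUDIT_LOG = []

def execute(tool_name, args, call_tool):
    """Gate every call through policy and record the decision."""
    allowed = tool_name in ALLOWED
    AUDIT_LOG.append({"tool": tool_name, "allowed": allowed})
    if not allowed:
        raise PermissionError(f"{tool_name} is discoverable but not permitted")
    return call_tool(tool_name, args)

def fake_call_tool(name, args):
    # Stand-in for the transport that would actually reach the tool.
    return f"{name} called with {args}"

print(execute("search_kb", {"q": "returns"}, fake_call_tool))
try:
    execute("delete_account", {"id": "u1"}, fake_call_tool)
except PermissionError as exc:
    print("blocked:", exc)
print(AUDIT_LOG)
```

Because every call passes through one function, the allow and deny decisions land in a single audit log rather than being reconstructed from several systems.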

Agent harnesses are no longer an implementation detail. They are the control plane of agentic systems.

The Strategic Choice Ahead

Tool-rich agents are easy to build and hard to sustain.

Organizations that succeed will resist the instinct to equate more tools with more intelligence. They will invest in harnesses, capability design, and discipline. Not because it is elegant, but because it is economically and operationally necessary.

The hidden cost of AI agent tools is not paid upfront.

It is paid slowly, quietly, and repeatedly—until someone decides to redesign the system.
