For years, progress in artificial intelligence was framed almost entirely around intelligence. Smarter models, higher benchmark scores, stronger reasoning, longer context windows. The implicit assumption was simple: if we keep increasing model capability, useful AI systems will naturally follow.

That assumption is now breaking down.

As organizations move from isolated model calls toward agentic systems that plan, act, and operate continuously, a different constraint has become visible. Intelligence alone does not determine whether an agent succeeds. Context does.

This is where the idea of Context Quotient (CQ) becomes unavoidable.

The Limits of Intelligence-Centric AI

Benchmark-driven progress made sense when AI systems were primarily answering questions or generating text in isolation. In those settings, raw reasoning power often correlated with output quality.

Agentic systems change the rules.

Agents are expected to take actions, sequence tasks, interact with tools, respect constraints, and adapt over time. In this environment, the difference between a correct answer and a useful outcome is rarely about reasoning alone. It is about whether the agent understands the situation it is operating in.

Highly capable models regularly fail not because they are “not smart enough,” but because they lack awareness of prior decisions, organizational constraints, historical exceptions, or implicit rules that are never written down.

From One-Shot Models to Agentic Systems

Modern agents are no longer stateless interfaces. They persist across interactions, coordinate multiple tools, and operate in environments shaped by business logic rather than abstract tasks.

This transition exposes a critical gap. While model intelligence scales through training and compute, context does not automatically scale. It must be captured, structured, maintained, and delivered at the right moment.

Without this, agents revert to confident guesswork, even when powered by the most advanced models available.

What Context Quotient Really Means

Context Quotient is not about how much data an agent has access to. It is about whether the agent has the right information at decision time.

High CQ means understanding why past decisions were made, not just what decisions were recorded. It includes knowledge of constraints, trade-offs, exceptions, and institutional memory that never appears in clean datasets.

This kind of context is rarely generic. It is deeply specific to an organization, a team, or even a single workflow. And it cannot be inferred reliably from surface-level data alone.

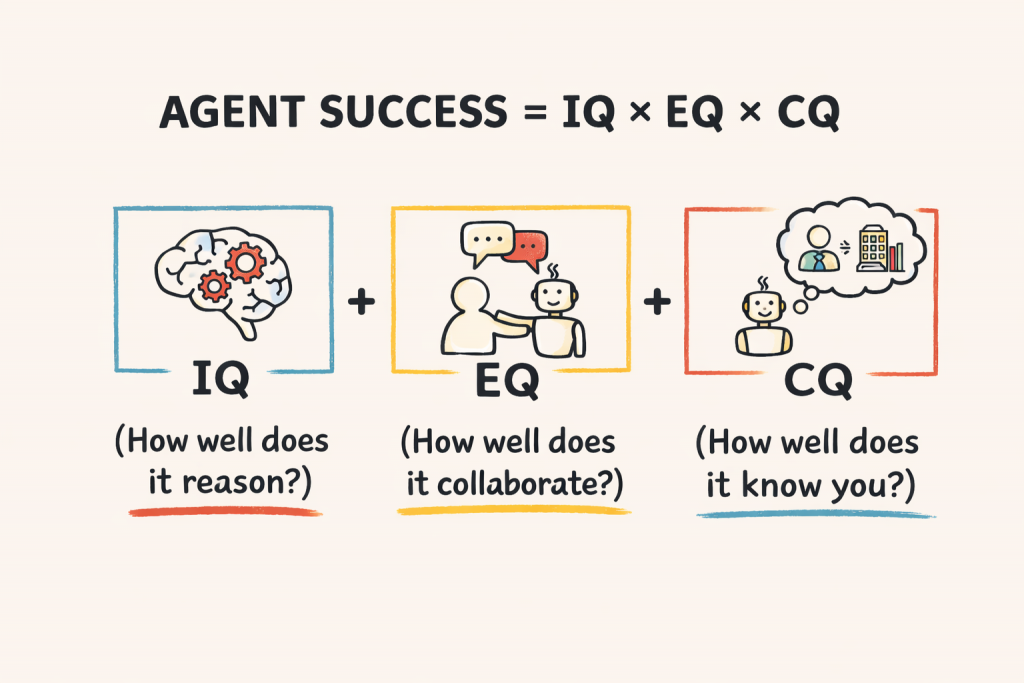

Why Context Is Multiplicative, Not Additive

Agent success is often framed as a sum of capabilities. In practice, it behaves more like a product.

An agent with exceptional reasoning but zero context produces results that are technically plausible yet operationally useless. Intelligence without context does not degrade gracefully. It collapses.

This is why CQ is best understood as a multiplier. When it approaches zero, overall system value approaches zero as well, regardless of how advanced the underlying model might be.
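The contrast between a sum and a product is easy to see with a toy calculation. The sketch below is illustrative only: the two scoring functions, the 0-to-1 scales, and the sample numbers are assumptions made for this example, not a measured or standard definition of CQ.

```python
def additive_value(capability: float, context: float) -> float:
    """Toy additive model: capability and context contribute independently."""
    return (capability + context) / 2


def multiplicative_value(capability: float, context: float) -> float:
    """Toy multiplicative model: context scales whatever capability provides."""
    return capability * context


# Hypothetical scores in [0, 1]; the pairs are (capability, context).
for capability, context in [(0.95, 0.0), (0.95, 0.5), (0.70, 0.9)]:
    print(
        f"capability={capability:.2f} context={context:.2f} "
        f"additive={additive_value(capability, context):.3f} "
        f"multiplicative={multiplicative_value(capability, context):.3f}"
    )
```

Under the additive model, a strong model with no context still appears to deliver roughly half of its potential value. Under the multiplicative model it delivers none, and a weaker model with rich context outscores a stronger model with partial context.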

High-CQ Agents in Practice

In real-world deployments, the difference between low-CQ and high-CQ agents is stark.

A sales agent that only sees CRM fields will struggle to replicate outcomes that depend on unwritten pricing logic, historical concessions, or relationship-specific nuances. A support agent without access to resolved escalations or known workarounds will repeat mistakes already learned by the organization. A marketing agent that ignores prior campaign failures will optimize metrics while undermining trust.

These failures are not model errors. They are context failures.

The False Promise of Smarter Models

As frontier models converge in capability, the returns on marginal intelligence improvements diminish. A slightly stronger reasoning model does not compensate for missing context. In many cases, it amplifies the problem by producing more confident but equally misaligned outputs.

This is why organizations that continuously swap models without rethinking context strategy see little improvement. The bottleneck is no longer intelligence. It is how closely the agent's picture of the situation matches the organization's reality.

Context as a Systems Problem, Not a Prompt Problem

Context cannot be solved with better prompts alone.

Sustained context requires memory systems, retrieval strategies, lifecycle management, and architectural discipline. It involves deciding what information persists, what decays, and what must be reintroduced at each decision point.

In other words, CQ is a systems problem. Treating it as a prompting exercise leads to brittle agents that degrade under real-world conditions.
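To make the persist, decay, and reintroduce decisions concrete, here is a minimal sketch of a memory layer. Everything in it is hypothetical: the `MemoryItem` and `ContextMemory` names, the time-to-live field, the pinning flag, and the naive word-overlap matching are assumptions chosen to keep the example short. A real system would likely use proper retrieval rather than word matching.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MemoryItem:
    text: str
    created_at: float            # seconds, on any consistent clock
    ttl: Optional[float] = None  # None means the item persists indefinitely
    pinned: bool = False         # pinned items are reintroduced at every decision


class ContextMemory:
    def __init__(self) -> None:
        self._items: list[MemoryItem] = []

    def remember(self, item: MemoryItem) -> None:
        self._items.append(item)

    def expire(self, now: float) -> None:
        """Drop items whose time-to-live has elapsed (the decay step)."""
        self._items = [
            i for i in self._items
            if i.ttl is None or now - i.created_at < i.ttl
        ]

    def context_for_decision(self, now: float, query: str) -> list[str]:
        """Return pinned items plus live items that share a word with the query."""
        self.expire(now)
        words = set(query.lower().split())
        return [
            i.text for i in self._items
            if i.pinned or words & set(i.text.lower().split())
        ]


# Hypothetical usage: one standing rule, one session fact, one short-lived note.
memory = ContextMemory()
memory.remember(MemoryItem("Never discount below 15% without approval", 0.0, pinned=True))
memory.remember(MemoryItem("Customer Acme asked for a renewal quote", 0.0, ttl=3600.0))
memory.remember(MemoryItem("Temporary outage in billing service", 0.0, ttl=60.0))

decision_context = memory.context_for_decision(now=120.0, query="draft renewal quote for acme")
print(decision_context)
```

The point of the design is that none of these choices live in the prompt. What persists, what expires, and what is always reintroduced are properties of the system around the model.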

Connecting Context Across Tools and Time

Enterprise context is fragmented by design. It lives across CRMs, analytics platforms, internal documents, ticketing systems, and human judgment.

High-CQ agents must reconcile these fragments over time. They must maintain continuity across sessions and adapt as organizational knowledge evolves. This requires deliberate orchestration rather than ad hoc tool access.

Without this, agents may appear functional in isolation while failing silently at scale.
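One simple way to picture reconciliation is a merge over fragments pulled from different systems. The sketch below assumes a last-update-wins policy and hypothetical source names and keys. Real deployments may need richer conflict rules, such as source priority or human review, so treat this as an illustration rather than a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    source: str        # hypothetical source name, e.g. "crm" or "tickets"
    key: str           # the fact this fragment describes
    value: str
    updated_at: int    # any comparable timestamp


def reconcile(fragments: list[Fragment]) -> dict[str, Fragment]:
    """Keep the most recently updated fragment for each key."""
    merged: dict[str, Fragment] = {}
    for fragment in fragments:
        current = merged.get(fragment.key)
        if current is None or fragment.updated_at > current.updated_at:
            merged[fragment.key] = fragment
    return merged


# Hypothetical fragments: two systems disagree about the same fact.
fragments = [
    Fragment("crm", "acme.contract_tier", "standard", updated_at=1),
    Fragment("tickets", "acme.contract_tier", "enterprise", updated_at=3),
    Fragment("docs", "acme.support_contact", "ops team", updated_at=2),
]
view = reconcile(fragments)
for key, fragment in sorted(view.items()):
    print(f"{key} = {fragment.value} (from {fragment.source})")
```

Keeping the source on each merged fragment preserves provenance, so a conflict that was resolved automatically can still be traced and audited later.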

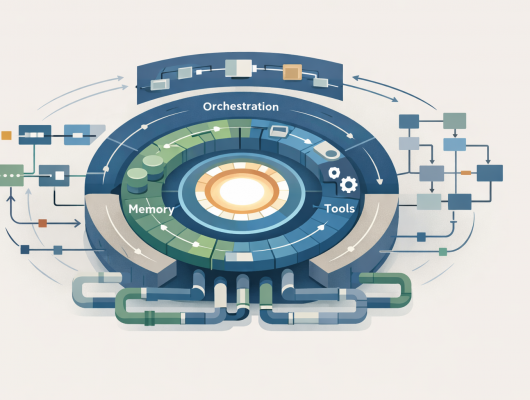

From Context to Control: The Role of Agent Architecture

This is where agent architecture becomes decisive.

Context must be governed, not merely retrieved. Agent harnesses, memory layers, and orchestration logic determine how context flows, how conflicts are resolved, and how failures are handled.

Emerging standards such as the Model Context Protocol (MCP) formalize how agents discover and call tools and access external resources, but the harness remains the layer that decides what context is exposed, when, and under what constraints. MCP standardizes the interface. Architecture enforces judgment.
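A harness that governs context, rather than merely retrieving it, can be sketched in a few lines. This is not MCP or any real library's API. The `Harness` and `ContextItem` classes, the numeric sensitivity levels, and the item budget are all hypothetical, chosen only to show the gating decision in isolation.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ContextItem:
    text: str
    scopes: frozenset[str]   # tasks this item is relevant to
    sensitivity: int = 0     # 0 = unrestricted; higher means more restricted


@dataclass
class Harness:
    clearance: int                                      # highest sensitivity the agent may see
    items: list[ContextItem] = field(default_factory=list)

    def expose(self, task: str, budget: int) -> list[str]:
        """Return at most `budget` items that match the task and the clearance."""
        allowed = [
            item.text for item in self.items
            if task in item.scopes and item.sensitivity <= self.clearance
        ]
        return allowed[:budget]


# Hypothetical usage: the agent handles a refund with limited clearance.
harness = Harness(clearance=1, items=[
    ContextItem("Refunds over $500 need manager sign-off", frozenset({"refund"}), 1),
    ContextItem("Board-level pricing memo", frozenset({"refund", "pricing"}), 3),
    ContextItem("Holiday campaign calendar", frozenset({"marketing"}), 0),
])
exposed = harness.expose(task="refund", budget=5)
print(exposed)
```

The agent never sees the restricted memo or the unrelated calendar. That filtering happens before the model is called, which is the sense in which the harness, not the model, enforces judgment.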

Why Context Becomes a Strategic Advantage

As model intelligence commoditizes, context becomes the primary differentiator.

Organizations that capture and operationalize their institutional knowledge will deploy agents that feel grounded, reliable, and trustworthy. Those that do not will struggle with agents that sound impressive but fail quietly in production.

The CQ gap is widening, not shrinking.

The Question That Actually Matters

In the agentic era, asking whether an agent is intelligent enough is no longer sufficient.

The more important question is simpler and harder at the same time:

How well does this agent understand my world?

The agents that succeed will not be the ones with the highest benchmark scores, but the ones with the deepest, most durable context.
