The Moment AI Agents Stopped Assisting and Started Acting

For years, AI assistants have been framed as productivity enhancers. They summarized, suggested, drafted, and occasionally impressed. But they rarely acted.

Clawdbot (now Moltbot) marks a subtle but important inflection point. Not because it is the most advanced AI system ever built, but because it treats autonomy as a default rather than an experiment. It does not merely respond to prompts. It executes decisions, interacts with real systems, and takes responsibility for outcomes.

This shift matters far beyond one viral open-source project. It signals a broader transition in how agentic AI is being designed, deployed, and evaluated.

What Is Clawdbot (Now Moltbot)?

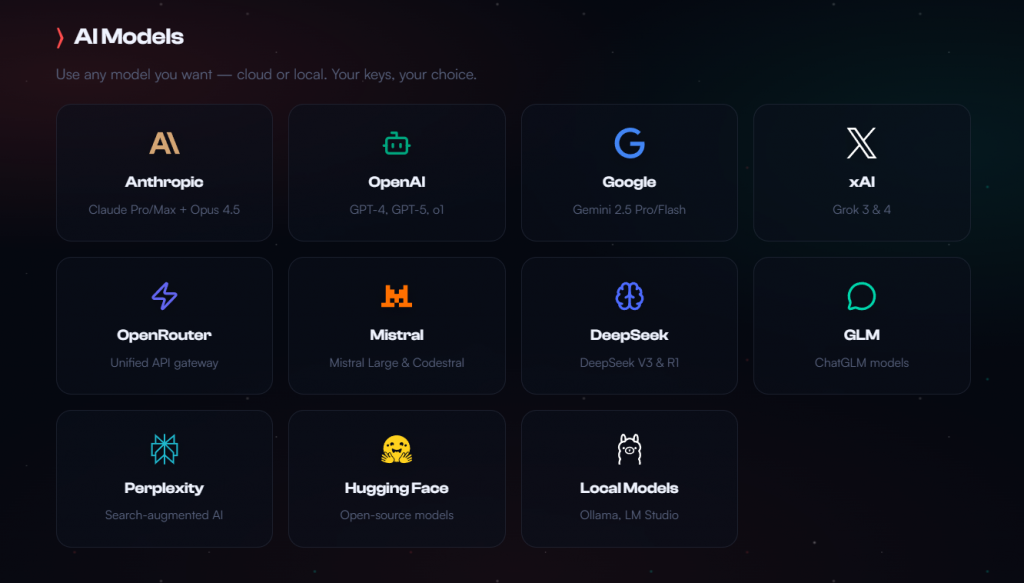

Clawdbot is an open-source, local-first personal AI agent built by Austrian developer Peter Steinberger. After a trademark challenge, it was renamed Moltbot, but its core philosophy remains unchanged.

The project describes itself as “the AI that actually does things.” In practice, that means an agent that can manage calendars, monitor inboxes, send messages across chat platforms, browse the web, fill forms, read and write files, and even execute shell commands.

Unlike most AI assistants, Moltbot runs on your own machine or server. Your data stays local. Your credentials stay under your control. The agent operates as part of your environment, not as a service abstracted behind a cloud interface.

Why Clawdbot Went Viral So Fast

Clawdbot’s rapid adoption was not driven by marketing. It spread because it resonated with a specific frustration shared by technically literate users.

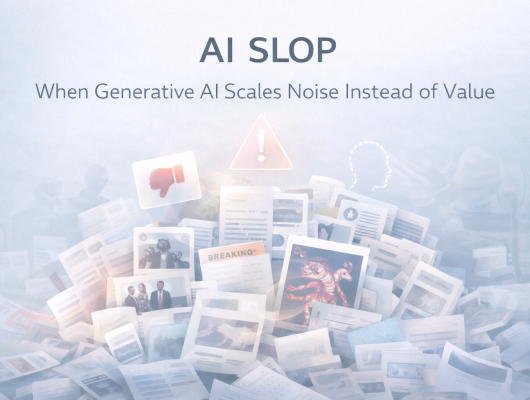

Many agentic AI projects over the past two years promised autonomy but delivered brittle demos. They looked impressive, but failed under real-world conditions. Clawdbot took a different path: it solved one person’s real problem first, then exposed that solution to others.

The now-famous “Mac Mini stacks” shared across social media are not about hardware fetishism. They are a visual metaphor. Developers are literally building small factories for autonomous agents, experimenting with what happens when AI is given persistence, memory, and authority.

This was less a product launch and more a cultural moment inside the builder community.

What Makes Clawdbot Different from Previous AI Assistants

Local Execution Instead of Cloud Dependency

Most AI assistants rely on remote execution. Even when they appear to act, the real work happens elsewhere. Clawdbot runs where the user runs. This eliminates an entire class of latency, privacy, and dependency concerns while introducing new design responsibilities.

Local execution also changes trust dynamics. The agent is no longer an opaque service. It becomes part of your system.

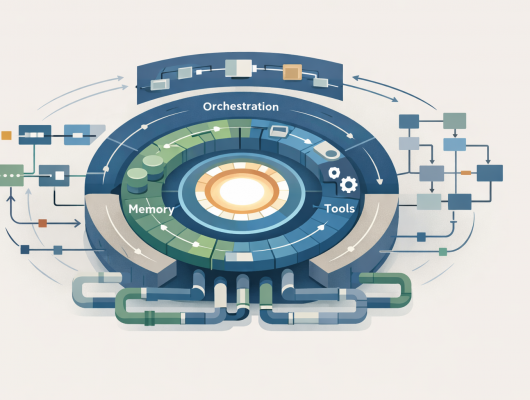

Persistent Memory as a First-Class Feature

Clawdbot is designed to remember. Not just chat history, but preferences, patterns, and ongoing context. This persistence allows the agent to behave less like a stateless tool and more like a collaborator that improves over time.

This is a critical distinction. Many so-called agents still reset cognitively between sessions. Clawdbot does not.

Real Tool and System Access

The most consequential difference is access. Clawdbot is not limited to curated APIs. It can interact with the operating system, the browser, messaging platforms, and files directly.

Its power does not come from a superior model. It comes from its position inside the user’s operational surface.

From Suggestions to Responsibility: The Real Shift

Most AI assistants optimize for plausibility. They generate responses that sound right. Clawdbot optimizes for execution.

This changes the nature of failure. When an agent only suggests, mistakes are cheap. When an agent acts, mistakes have consequences. Files change. Messages are sent. Systems are modified.

Clawdbot crosses the line from “assistant” to “authorized actor.” That line is where agentic AI stops being a UX problem and becomes a systems problem.

The Trade-Off: Autonomy vs Security

The very features that make Clawdbot compelling also make it risky.

An agent with shell access, browser control, and messaging integration is exposed to prompt injection, social engineering, and unintended command execution. A malicious message does not need to break encryption; it only needs to influence behavior.

Running locally and being open source improves transparency, but it does not eliminate risk. Safety becomes a function of setup discipline, sandboxing, and user awareness.

This is not a flaw unique to Clawdbot. It is a preview of the security model challenges that all autonomous agents will face.

Why Clawdbot Is Not a Consumer Product (Yet)

Despite the hype, Clawdbot is not ready for mass adoption. Safe usage currently requires technical competence, threat modeling awareness, and often a separate environment with limited privileges.

Ironically, this undermines its core promise as a “personal assistant.” The more useful it becomes, the more carefully it must be constrained.

This tension between utility and safety is not accidental. It is structural.

What Clawdbot Tells Us About the Future of Agentic AI

The Real Bottleneck Is Permission, Not Intelligence

Clawdbot does not outperform other assistants because it reasons better. It outperforms them because it is allowed to do more. Authority, not cognition, is the differentiator.

Security Can No Longer Be an Afterthought

Once agents act, security is no longer a compliance layer. It becomes a design primitive. Permissioning, isolation, and verification must be architected from day one.

Personal Agents Are a Precursor to Enterprise Systems

History suggests that personal productivity tools often foreshadow enterprise platforms. What starts with developers experimenting on their own machines typically evolves into standardized organizational infrastructure.

Clawdbot fits that pattern.

From Personal Agents to Enterprise Agent Architectures

As agents scale beyond individual users, the problem shifts from “what can this agent do?” to “under what conditions should it be allowed to act?”

This is where context becomes decisive.

An agent with broad access but shallow understanding is dangerous. An agent with deep contextual awareness can be constrained intelligently. This distinction is explored in more detail in Context Quotient: What Actually Makes AI Agents Useful at Scale, where the focus shifts from model capability to contextual alignment.

At enterprise scale, raw autonomy is not enough. Agents need structured context, governed execution paths, and clearly defined boundaries.

Clawdbot Is Not the Endgame. It’s the Signal.

Clawdbot is imperfect, risky, and intentionally unfinished. That is precisely why it matters.

It demonstrates that the question is no longer whether AI agents can act. They already can. The real question is whether our systems, security models, and organizations are ready to let them.

This transition will not be driven by bigger models or better prompts. It will be driven by architecture, context, and control.

Clawdbot does not represent the future of AI agents.

It reveals that the future has already started.

![]()